In a digital world where children are turning to mobiles and tablets to learn, develop, grow and entertain themselves, the promise of a safe “world of learning and fun, made just for kids” from YouTube Kids sounds ideal to parents.

And why shouldn’t it be? There’s nothing wrong with letting your child watch a video or play a game while you do the washing up. However, the recent trend of disturbing videos making their way onto YouTube Kids reveals a sinister side to an app that is supposed to keep children safe online.

A Rise in Mobile Technology Usage Among 0-8 Year Olds

So how widespread is the app, and the technology it can be accessed on?

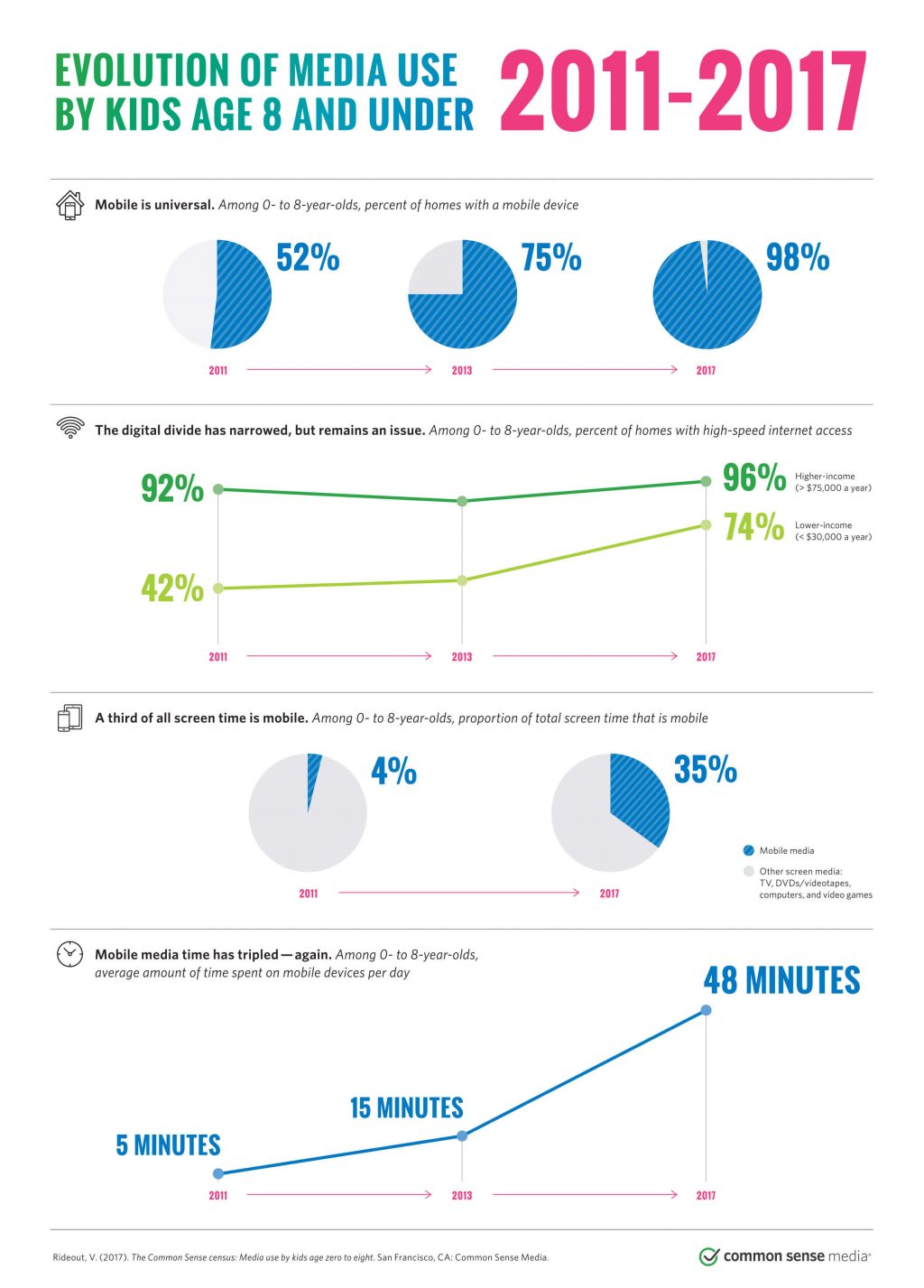

Firstly, according to data from The Common Sense Census, 98% of 0-8 year olds lived in a home with a mobile device in 2017. That is a staggering increase of 46 percentage points since 2011 (up from 52%), showing that mobile technology use is still rising, even if it is nearly saturated.

Also in 2017, mobile devices accounted for over a third of the screen time of 0-8 year olds. That is a dramatic leap from 4% in 2011 to 35% in 2017.

What’s more, the full summary released by Common Sense Media showed that 78% of 0-8 year olds also live in a home with a tablet, further widening their potential access to a screen. As many as 42% even have their own tablet, an increase of 41 percentage points between 2011 and 2017.

As for YouTube Kids, the Google Play store estimates that between 10 million and 50 million devices have it installed. Apple does not share install numbers for the App Store, but it seems reasonable to assume a figure in a similar ballpark there. That would put the app on a minimum of roughly 20 million devices.

This easy access to technology and video naturally means that children are spending more and more time online. Whilst the internet can be informative and educational, parents need to be more aware than ever that it can also expose children to explicit content if that content is not filtered out correctly.

The battle against offensive or harmful content has been waged for many years now. New measures to keep explicit content away from minors are also planned, with Government legislation set to give Internet Service Providers more power to filter out explicit content.

An article in The Telegraph recently reinforced this sentiment, quoting a spokesperson for the Department for Culture, Media and Sport as saying:

“We are committed to keeping children safe from harmful pornographic content on the internet and this amendment will give internet service providers reassurance the family friendly filters they currently offer are compliant with EU law.”

YouTube Kids Proves Ineffective Against Warped Videos

Unfortunately, despite attempts to keep children safe online, a recent controversy on YouTube Kids saw a flurry of accounts publish disturbing videos. These went unnoticed for months before the issue erupted into a storm of outrage.

Some videos were autoplayed based on keywords and recent searches, and they placed popular ‘safe’ characters such as Peppa Pig in horrific scenes. Other content included disgusting themes or bordered on child exploitation and abuse, featuring children who appeared visibly upset and distressed.

Whilst the internet has always had darker corners where twisted content is available, the most shocking fact about YouTube Kids is that these videos slipped past YouTube’s own filtering features. Worse still, some videos depicting violence against children came from “verified” accounts such as Toyfreaks and Mister Tisha.

Perhaps most concerning of all is how easily children could reach these videos. Innocent-looking keywords and branded images (such as the Peppa Pig content) were used deliberately, which made it all too easy to stumble across a disturbing video. Some have described this tactic as “gaming the algorithm”.

Once a child had viewed just one such video, the algorithms could then recommend or autoplay similar content. This risked trapping the child in a traumatic cycle of inappropriate videos. In many ways, YouTube’s own automated systems were being turned against it.

The failure of YouTube Kids to protect children from harmful online content has led many parents to seek other platforms for child-friendly content. One popular recent option is Jellies, an alternative video app made for parents that claims to be ad-free and fully moderated.

YouTube Toughens Approach to Child Protection

In fairness to YouTube, it has fought against harmful content for years. Shortly after the controversy, it unveiled additional methods of flagging, moderating and removing harmful content.

According to USA Today, CEO Susan Wojcicki said that YouTube would increase the number of people working to oversee content to more than 10,000 in 2018, rather than relying solely on complex machine learning and algorithms. She added:

“Human reviewers remain essential to both removing content and training machine learning systems because human judgment is critical to making contextualized decisions on content.”

Additionally, the official YouTube blog set out five expanded measures to fight inappropriate content:

1. Tougher application of our Community Guidelines and faster enforcement through technology.
2. Removing ads from inappropriate videos targeting families.
3. Blocking inappropriate comments on videos featuring minors.
4. Providing guidance for creators who make family-friendly content.
5. Engaging and learning from experts.

If these measures are applied properly, they should begin to keep content that could endanger a child off the platform. According to the same blog post, YouTube shut down more than 50 channels featuring distressing content in a single week, even where distress was not the intent or purpose of the content. However, plenty more such channels clearly remain and need to be shut down quickly.

YouTube has also pledged to remove advertising from videos featuring family entertainment characters engaged in violent, offensive, or inappropriate behaviour. It has also promised guidance for those who want to create enriching, family-friendly content.

Ultimately, YouTube Kids has taken responsibility for the failure of its filters, but nothing can replace parental vigilance. Algorithms, human reviewers and a flagging system can reduce the amount of inappropriate content, but it is not safe to rely on them entirely. However difficult and time-consuming it can be, parents should always know what content their children can access. It is really the only way to be sure.

Thankfully, there are plenty of apps that help parents keep a closer eye on their young children’s internet access. For more support and information on parental controls and how to apply them, please visit our Parental Safety section.